Got Supplementals? Accepting PageRank is Only The Beginning

Nowadays, supplemental results aren’t much of a mystery to most people. As Matt Cutts said in reply to Michael Martinez at Seattle SMX, people know all they need to do is get more “quality links.” The answer is so simple, so easily digestible, that I’m starting to see people answer supplemental threads with just a few words: PageRank and duplicate content. As if.

What makes you gain weight? Eating too much. Duh. Knowing that doesn’t help you much, does it? How can you lose weight and keep it off? Knowledge isn’t power. Actionable knowledge is power.

Understanding Supplemental Results and PageRank Distribution

You have countless internal-link-based tactics at your disposal to combat supplemental results. In a WebmasterWorld (WMW) thread titled “Supplemental Page Count Formula?”, I summarized:

If your site is largely supplemental, it means one or more of the following: 1) not enough quality inbound links point to your site, 2) you have too many pages, 3) you link out too much, 4) Google may think your IBLs are artificial, or 5) canonical issues are causing PageRank to split.

Bouncybunny replies:

I’ve never heard points 2 + 3 being relevant for pages falling into the supplementals.

I’ll leave the discussion about PageRank leaks for another day. As for point 2, I explained (in geek speak):

Think of total PageRank X (the sum of all inbound PageRank to your domain) split between Y pages. Roughly speaking, a bigger page count means a lower average PageRank per page (depending on your site structure). We know that a page with PageRank below a minimum threshold “goes” supplemental. With an excessively high page count, the average falls too low, and you’ll end up with many pages in the supplemental index. By reducing the number of pages, you slightly increase the average PageRank per URL. That can result in several supp pages popping back into the main index.
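To make the arithmetic concrete, here is a minimal Python sketch. It assumes every page gets exactly the average share X/Y, which no real site structure does, and it uses a made-up threshold: Google has never published an actual cutoff, so the numbers are purely illustrative.

```python
def average_pagerank(total_pr: float, page_count: int) -> float:
    """Average PageRank per URL when total_pr (X) is split over page_count (Y) pages."""
    if page_count <= 0:
        raise ValueError("page_count must be positive")
    return total_pr / page_count


def below_threshold(total_pr: float, page_count: int, threshold: float) -> bool:
    """True if the average page falls under the (assumed) supplemental threshold."""
    return average_pagerank(total_pr, page_count) < threshold


# Hypothetical figures: X = 120 units of inbound PageRank, cutoff = 0.05 per page.
TOTAL_PR = 120.0
THRESHOLD = 0.05

# Start small, bloat the site, then prune some pages back out.
for pages in (600, 3_000, 2_000):
    avg = average_pagerank(TOTAL_PR, pages)
    where = "supplemental" if below_threshold(TOTAL_PR, pages, THRESHOLD) else "main index"
    print(f"{pages:>5} pages -> average {avg:.3f} per page ({where})")
```

Pruning from 3,000 to 2,000 pages lifts the average from 0.04 back over the assumed 0.05 cutoff, which is the "reduce your page count" effect in its crudest form. On a real site, structure decides which individual pages fall below the line.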

As a matter of fact, Shoemoney claims he got rid of some of his supplemental results by following Aaron Wall’s advice: use robots.txt to disallow noisy pages.
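If you want to sanity-check a robots.txt before deploying it, Python's standard-library `urllib.robotparser` can tell you which URLs a compliant crawler would skip. The rules and paths below are hypothetical examples (tag archives and paginated archives standing in for "noisy" pages), and `example.com` stands in for your own domain.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a WordPress blog. Which paths count as
# "noisy" is a judgment call; these two are just examples.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tag/
Disallow: /page/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "http://example.com/tag/seo/",
    "http://example.com/page/2/",
    "http://example.com/supplemental-results/",
):
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url} -> {'crawl' if allowed else 'blocked'}")
```

This only checks crawl permissions. It says nothing about how Google treats the links that still point at blocked URLs, so whether it frees up PageRank the way Shoemoney describes is his claim, not something this check proves.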

Don’t Cut Up Your Pizza Into 60,000 Slices if You Ordered a Small Pie

Thanks to Andy Beal, you can hear Matt Cutts say basically the same thing:

If you got 60,000 pages, and you only got “this much” PageRank, and you divide it […he mumbles], some of them are going to be in the supplemental index. Given “this many people” who link to you, we’re willing to include “this many” pages in the main index.

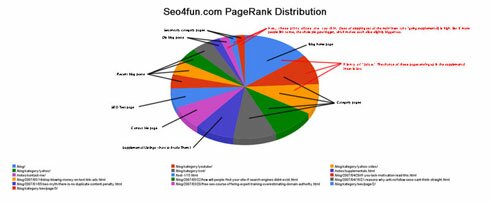

The picture below shows you how PageRank is distributed on this domain, assuming an artificial scenario where all inbound links are ignored:


Aside: I generated this chart using Google Docs, Photoshop, and my Supplemental Results Detector, which I wrote back in December 2006 (a few of you guys might remember it). It’s a simple script that emulates Google’s PageRank iteration. Though it ignores inbound links and uses the original PageRank formula, where all PageRanks add up to the total number of pages on a domain instead of 1, it’s a pretty good indicator of which pages on your site are prone to go supplemental. It’s a gift from above for PageRank hoarders, but at the same time, it helps you organize your internal links more strategically.

Notice:

- More inbound links = bigger pie. As you guys link to me more often (hint hint), my pizza gets bigger, which makes all my slices grow. Phatter slices mean fewer supplemental results.

- More artificial links = smaller pie. Reciprocal links, cheap directory links, link injections, easily detectable paid links: all of these work to some extent if you play it like Sam Fisher. Uline.com ranks on the first page for “cardboard boxes”, Thisisouryear ranks first for “website directory”, and customermagnetism ranks 13th for “search engine optimization”, all thanks in part to paid links. Major adult sites also dominate competitive porn terms using hundreds of thousands of reciprocal links. But having tons of artificial links pointing at your site makes them easier for Google to detect. If Google decides to devalue the PageRank passed by those links, your large pizza turns into a medium, sometimes pushing your site into the realm of Google Hell.

- More pages = smaller slices. As I publish more posts, I create more slices, causing all my slices to shrink. What happens to a commercial site that publishes 100,000 new pages in one day? Or to a blogger who publishes 10 posts a day but gets completely ignored by the linkerati? They often go 99% supplemental because they’re adding a ton more slices while the pie stays the same size. More slices mean smaller slices, and if they’re too small, they “go supplemental.” But if I publish something useful, people will link to me, increasing the size of my pie.

- Fewer pages = bigger slices. Conversely, deleting pages causes the size of my other slices to grow. It’s like a page “taking one for the team.”
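The aside describes the Supplemental Results Detector only in outline, so the code below is not that script. It's a minimal Python sketch of the same idea under stated assumptions: the original formula PR(A) = (1 - d) + d * sum(PR(T) / C(T)) with d = 0.85, no inbound links from other domains, a hypothetical five-page site where every page links out at least once (so the scores keep summing to the page count), and an arbitrary cutoff for flagging pages that look "prone to go supplemental."

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], d: float = 0.85, iterations: int = 50) -> Dict[str, float]:
    """Original (non-normalized) PageRank: scores sum to the number of pages.

    links maps each page to the pages it links to. Inbound links from other
    domains are ignored, and every page is assumed to link out at least once.
    """
    pages = list(links)
    pr = {page: 1.0 for page in pages}  # every page starts at 1
    for _ in range(iterations):
        # Each page keeps (1 - d) and receives d times an equal share of
        # the score of every page that links to it.
        pr = {
            page: (1 - d) + d * sum(pr[src] / len(links[src]) for src in pages if page in links[src])
            for page in pages
        }
    return pr


# Hypothetical site: page names and link structure are made up.
SITE = {
    "home": ["category", "post-a", "post-b", "about"],
    "category": ["home", "post-a", "post-b"],
    "post-a": ["home", "category"],
    "post-b": ["home", "category"],
    "about": ["home"],
}
CUTOFF = 0.6  # arbitrary; there is no published threshold

scores = pagerank(SITE)
for page, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    flag = "  <- prone to go supplemental?" if score < CUTOFF else ""
    print(f"{page:<9}{score:.3f}{flag}")
print(f"total: {sum(scores.values()):.3f} (number of pages: {len(SITE)})")
```

Here "about" is linked only from the home page, so it ends up with the smallest share and is the one page the arbitrary cutoff flags.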

Move Away from the Default WordPress Setup for Better PageRank Flow

The chart also gives you an idea of how the default WordPress template distributes PageRank. The blog home page gets the most love, category pages are second in line, and recent posts are third. The second page of a category (e.g. /category/seo/2/) and old posts have the least internal link juice flowing into them by default.

If you use the Recent Posts plugin, your recently published posts get love from the blog home page, the first page of each category and archive, and every single post page. If you install a Top Posts plugin, you can direct extra juice (and traffic) to your favorite posts (see Jim Boykin’s blog, though the way he has it set up is kinda fugly). The Related Posts plugin can help maintain internal linkage between old posts.
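To see why a sitewide Recent Posts link helps, here is a sketch that runs the same simplified iteration (original formula, internal links only, d = 0.85) on a hypothetical eight-page blog and compares the newest post's score with and without a sidebar link to it from every page. The page names and link structure are made up; this is a toy model, not a measurement of any real plugin.

```python
from typing import Dict, List

Links = Dict[str, List[str]]


def pagerank(links: Links, d: float = 0.85, iterations: int = 50) -> Dict[str, float]:
    """Original PageRank formula on internal links only; scores sum to len(links)."""
    pages = list(links)
    pr = {page: 1.0 for page in pages}
    for _ in range(iterations):
        pr = {
            page: (1 - d) + d * sum(pr[src] / len(links[src]) for src in pages if page in links[src])
            for page in pages
        }
    return pr


def with_sidebar_link(links: Links, target: str) -> Links:
    """Copy of links where every other page also links to target (once), like a sidebar widget."""
    return {
        page: outs if page == target or target in outs else outs + [target]
        for page, outs in links.items()
    }


# Hypothetical blog: home and category page 1 list the three newest posts,
# category page 2 lists the old post, and every page links back home.
BASE: Links = {
    "home": ["category", "new-post", "post-2", "post-3", "about"],
    "category": ["home", "new-post", "post-2", "post-3", "category-2"],
    "category-2": ["home", "category", "old-post"],
    "new-post": ["home", "category"],
    "post-2": ["home", "category"],
    "post-3": ["home", "category"],
    "old-post": ["home", "category"],
    "about": ["home"],
}

before = pagerank(BASE)
after = pagerank(with_sidebar_link(BASE, "new-post"))

for page in BASE:
    print(f"{page:<11} {before[page]:.3f} -> {after[page]:.3f}")
```

In this toy graph the home page scores highest and the old post lowest, in line with the default distribution described above, and the sidebar link raises new-post's score. Because the total stays fixed at the page count, that gain has to come out of the other pages.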

Internal Linking Tactics Are Half the Battle

As Adam Lasnik would probably tell you, the best cure for supplemental results is to create original, compelling content, market it, and earn the links you deserve. But you can also get mileage out of the PageRank you already have. Think of it like tweaking one of your landing pages to improve your CTR: a 2% increase can add up to a lot of money. Remember, people used to believe duplicate text caused supplemental results. But duplicate URLs, which create more slices than you need, are partly to blame, as Vanessa Fox recently confirmed on her blog:

Does having duplicate content cause sites to be placed there? Nope, that’s mostly an indirect effect. If you have pages that are duplicates or very similar, then your backlinks are likely distributed among those pages, so your PageRank may be more diluted than if you had one consolidated page that all the backlinks pointed to. And lower PageRank may cause pages to be supplemental.
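Vanessa's point can be sketched in Python: backlinks spread over several variants of one URL each carry a thin slice, while the same backlinks pointed at one consolidated URL add up. The normalization rules here (strip "www.", drop index.html/index.php, drop query strings) are hypothetical examples, not Google's canonicalization logic, and the sketch assumes the idealized case where consolidating (e.g. via 301s) carries every variant's PageRank over intact. Blindly dropping query strings would break sites that actually use them.

```python
from collections import defaultdict
from typing import Dict
from urllib.parse import urlsplit, urlunsplit


def canonicalize(url: str) -> str:
    """Collapse a few common duplicate-URL variants (hypothetical rules)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    path = parts.path or "/"
    for index_page in ("index.html", "index.php"):
        if path.endswith("/" + index_page):
            path = path[: -len(index_page)]
    # Query string and fragment are dropped outright (assumption: on this
    # site they only carry session IDs or tracking junk).
    return urlunsplit((parts.scheme.lower(), host, path, "", ""))


def consolidate(backlink_pr: Dict[str, float]) -> Dict[str, float]:
    """Sum the PageRank arriving at each URL variant onto its canonical URL."""
    merged: Dict[str, float] = defaultdict(float)
    for url, pr in backlink_pr.items():
        merged[canonicalize(url)] += pr
    return dict(merged)


# Made-up backlink PageRank landing on four variants of one home page.
SPLIT = {
    "http://www.example.com/": 0.9,
    "http://example.com/": 0.6,
    "http://example.com/index.html": 0.3,
    "http://example.com/?sessionid=123": 0.2,
}

print(consolidate(SPLIT))
```

No single variant gets more than 0.9 in this example; consolidated, the page gets the full 2.0. One big slice instead of four thin ones.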

[…] Update 6/7/07 - Halfdeck posts his analysis of the film clip; it’s worth the read for his explanation of Supplemental Results. […]

Supplemental Pages: Again » JLH Design Blog said this on June 7th, 2007 at 1:33 pm

Half, excellent article. I found the last part of Matt’s statement regarding the way the supplemental results are parsed and compressed the most interesting.

I’ve discussed it in detail on my own blog.

http://www.jlh-design.com/2007/06/supplemental-pages-again/

I’ve added a reference to this article as well :)

JLH said this on June 7th, 2007 at 1:36 pm

Thanks JLH.

“I’ve discussed it in detail on my own blog.”

I agree about Google’s limited resources. One indicator is the “PageRank not yet calculated” message you see in Webmaster Tools. It makes sense for Google to run a PageRank iteration on “important” URLs and leave the rest for later.

Halfdeck said this on June 7th, 2007 at 2:13 pm

The PageRank Cure for Supplemental Pages

Here’s a gem of a post courtesy of Half’s SEO Notebook.

Think of total PageRank X (sum of all inbound PageRank to your domain) split between Y number of pages. Roughly speaking, bigger page count = lower average PageRank per page (depending on your…

Macalua.com said this on June 8th, 2007 at 11:47 am

Great stuff, Half, but as I said in an email a few days ago, “The blog is murder on my eyes.” Well, I may have a solution for myself and any Firefox 2.0 users. I grabbed the Stylish add-on to build a CSS style sheet just for this. It saves the style, and with a click of the mouse I’m reading black on white. You can grab it here: https://addons.mozilla.org/en-US/firefox/addon/2108

John

John said this on June 11th, 2007 at 10:02 pm

Thanks John :)

BTW, I like the white lake world logo and the liquid design. I can see how coming from a site like that to my site might make you feel a little claustrophobic. Hey, like I said, overhauling this site’s design is on my to-do list, but it’s way down on the totem pole since my top priority is to make money with other sites. It doesn’t mean I don’t value my readers or I got a sadistic streak; it just means I got too many things on my plate :)

Halfdeck said this on June 12th, 2007 at 1:16 am

Half, great article. I’ve always read that the solution to getting out of the supplementals is to get more quality links, but I didn’t know why until now.

Larry Lim said this on June 14th, 2007 at 7:46 am

Thanks Larry. Hopefully my explanation will help at least a few people get more traffic. With all the time people put into building a great site, they deserve it.

Halfdeck said this on June 14th, 2007 at 9:46 am

[…] Everything SEO and internet marketing related is found here. When I’m reading this blog I almost feel like I’m listening to a professor at school, not because it’s boring at all, but because he has so much knowledge. I don’t think I even know where to start pointing you, but his latest article is a VERY informative one on understanding PageRank distribution. Another post of his that I actually read often is “If You Lack Motivation, Read This”. It’s an awesome collection of motivational quotes that keeps me on track from time to time. […]

Free Link Friday!: 15 June 2007 | Cody Staub said this on June 15th, 2007 at 11:31 am

[…] Not all pages within Sphinn need to be indexed. By preventing PageRank from flowing to these noisy pages, more link juice will flow to the most important pages. Google will not put pages in its main index if they don’t have enough quality links pointing to them. Think of it like a pizza. The more slices you cut, the smaller each slice becomes. By only allowing search engines to count the links you want them to, those remaining pages have the best chance to get and stay indexed. They effectively become larger slices. […]

5 Easy Ways to Make Sphinn SE Friendly » SEM.co.nz said this on November 14th, 2007 at 4:09 am

Halfdeck,

I’ve used a lot of your techniques to improve the internal linkages on my WP blog site… and now I’m wondering: if I decide that a supplemental content page is really a “noisy page”, should I just delete the page and return a 404 to Google? Or should I delete it and 301 redirect, maybe to the page’s former category? Or should I modify the text, title, and URL, and update the date/time stamp to today so Google sees it as a new post, redirecting the old URL to the new one?

Rob Blake said this on November 30th, 2007 at 6:35 pm

Rob,

You don’t need to 301 redirect unless a post has backlinks. To make Google think a post is new, just modify the content of a post. If a blog post isn’t important to you or your readers, I would just delete the post and issue a 404/410.

Halfdeck said this on December 5th, 2007 at 4:13 am