How Google Failed to Hide Supplemental Results

If you’re an SEO with clients who are worried about supplemental results, your job just got a whole lot harder. It’s like treating a patient who is dying of a disease but shows no visible symptoms. Not only does he believe he isn’t sick anymore, but you can’t tell what he’s sick with.

First, your clients should know that not seeing the supplemental results label anymore doesn’t mean their worries are over. Their supplemental pages are still supplemental. Google is just trying to hide the fact.

They should also know that just because the label is gone doesn’t mean you can’t detect supplemental results. You can, and here’s how:

- The site:www.domain.com/& hack, which seems to pull up URLs that used to be labeled supplemental. Of course, now that Danny Sullivan has blogged about it, the hack probably won’t last another week. (UPDATE: According to Danny, the hack was covered last week, the same week I pulled almost all my SEO feeds from Google Reader so I wouldn’t be bombarded by SEO news. Bad timing, I guess.)

- site:www.domain.com/* shows pages in the main index.

- Old cache date. If a page’s cache date is old, it’s a sign that the page may be supplemental. Why? Because Google doesn’t refresh a supplemental result’s cache all that often. For example, my blog’s main URLs have cache dates ranging from Jul 25-26, 2007 (today’s date: Aug 1, 2007), while my old supplemental pages have cache dates as old as Jul 6-7, 2007.

- Little or no traffic for competitive terms. If you’re not getting Google hits for two-word queries, or you’re getting no traffic at all to a specific URL, that URL may be supplemental.

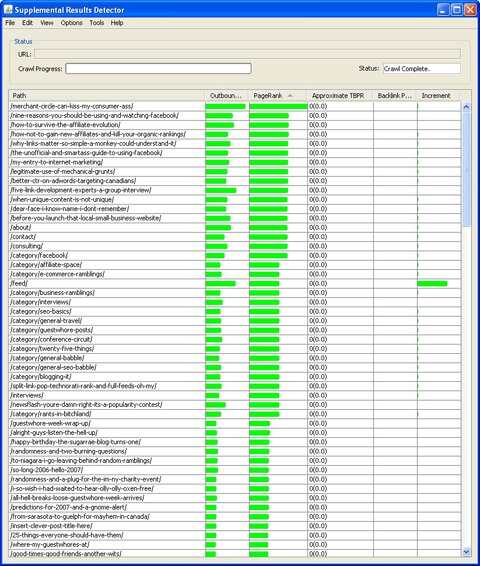

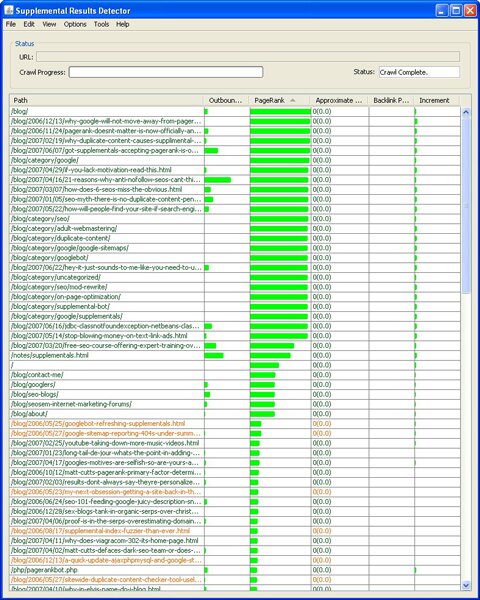

- Uneven PageRank distribution, which you can check by downloading the Supplemental Results Detector Tool. See how sugarrae.com and seo4fun.com distribute PageRank?

See how sugarrae’s PageRank distribution is pretty even, so there isn’t a huge gap between the home page and the deep pages? The site is 99% free of supplemental results. Yeah, it’s a high-TBPR site with only ~100 pages (which means plenty of PageRank to go around for each page), but so is vanessafox.com (TBPR 7 with ~100 pages), which has more supplemental results than sugarrae.com.

(orange urls are supplemental)

In contrast, seo4fun.com concentrates PageRank on just a handful of pages while the rest of the site gets very little. Consequently, some of the URLs near the bottom of the chart, the ones with low link popularity, are supplemental.

- Low Toolbar PageRank (0-3). The toolbar is a weak indicator because its values are updated with a delay, but more green generally means a page is less likely to be supplemental.
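To make the "even vs. concentrated" idea concrete, here’s a minimal Python sketch of internal PageRank computed by power iteration over a site’s own link graph. It is not the Supplemental Results Detector Tool’s code; it assumes only internal links count (external inbound links are ignored), a damping factor of 0.85, and scores normalized to sum to 1. The function name `internal_pagerank` and the six-page site are hypothetical.

```python
def internal_pagerank(links: dict[str, list[str]], damping: float = 0.85,
                      iterations: int = 50) -> dict[str, float]:
    """Power-iteration PageRank over a site's internal link graph; scores sum to 1."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    pr = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pages with no outlinks ("dangling") spread their score evenly over every page.
        dangling = sum(pr[p] for p in pages if not links.get(p))
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for src, targets in links.items():
            if targets:
                # Each outlink passes an equal share of the source page's score.
                share = damping * pr[src] / len(targets)
                for t in targets:
                    new[t] += share
        pr = new
    return pr


# Hypothetical site: the home page links to two category pages, which link to posts.
site = {
    "/": ["/cat-a/", "/cat-b/"],
    "/cat-a/": ["/", "/post-1/", "/post-2/"],
    "/cat-b/": ["/", "/post-3/"],
    "/post-1/": ["/"],
    "/post-2/": ["/"],
    "/post-3/": ["/"],
}

scores = internal_pagerank(site)
for url, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{url:10} {score:.3f}")
# A large max/min ratio means PageRank is concentrated on a few pages.
print("gap:", round(max(scores.values()) / min(scores.values()), 1))
```

In this toy graph the posts under /cat-a/ share their parent’s score three ways while /post-3/ shares /cat-b/’s only two ways, so the deep pages under the busier category end up with less; that is the kind of gap the charts above show.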
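The old-cache-date signal from the list above can also be checked mechanically once you’ve copied cache dates by hand from Google’s cached-page headers. A minimal sketch, assuming a hypothetical helper `flag_stale_cache` and an arbitrary 14-day threshold measured against the site’s most recently cached page; an old cache date is only a hint, not proof.

```python
from datetime import date


def flag_stale_cache(cache_dates: dict[str, date], max_lag_days: int = 14) -> list[str]:
    """Return URLs whose cache date trails the freshest cached URL by more than max_lag_days."""
    if not cache_dates:
        return []
    newest = max(cache_dates.values())
    # A big lag behind the site's freshest cache date hints at (but doesn't prove) supplemental status.
    return sorted(url for url, cached in cache_dates.items()
                  if (newest - cached).days > max_lag_days)


# Hypothetical URLs, with cache dates modeled on the ones quoted in the post.
pages = {
    "/": date(2007, 7, 26),
    "/about/": date(2007, 7, 25),
    "/2006/old-post/": date(2007, 7, 6),
    "/tag/misc/": date(2007, 7, 7),
}

print(flag_stale_cache(pages))  # ['/2006/old-post/', '/tag/misc/']
```

Measuring the lag against the site’s own freshest page, rather than against today’s date, keeps the check meaningful even if Google recrawls the whole site slowly.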

TANGENT:

(One interesting tidbit in the recent Google blog post is Matt/Prashanth Koppula saying that a URL with complicated query strings might also go supplemental. At this point, considering that Dave said stale pages go supplemental as well, it’s probably safe to assume a myriad of minor factors are involved.)

(After reading the post, the reasons Google likes supplemental results are pretty clear:

1. Crawl the web more fully to serve ~1000 results (or maybe Google’s satisfied with just 10-100) for every possible search query, which means a) a happier user and b) more pages to display AdWords on.

2. Improve efficiency through prioritized crawling: crawl important, frequently updated pages more often and less important, rarely updated pages less often. Unfortunately, this often means only the home page and top-level navigation pages get indexed while pages with actual content fail to make it into the main index. I often get frustrated by a search result that lands me on a blog category page with 40+ blog post links instead of the blog post itself.)

Wrapping up:

A site with many pages in the main index receives traffic for competitive two-word queries, and that traffic lands not on just a handful of pages but on thousands of them. Googlers promise that by the end of the summer, supplemental results will generate more traffic and rank for more terms. We’ll see. There are a ton of spam pages in the supplemental index, so Google will have to walk a fine line; otherwise, odd query terms will be swamped with low-PageRank spam while legitimate supplemental results never see the light of day.

Blogging about the hack happened last week, after it came out at WebmasterWorld. Fair to say, Google has known about it. More to the point, the hack doesn’t help if they take away the supplemental label.

Danny Sullivan said this on August 1st, 2007 at 4:02 am

“Blogging about the hack happened last week, after it came out at WebmasterWorld.”

The same week I pulled 200+ SEO blog feeds from Google Reader so I could actually get some work done… go figure. The hack still shows pages that used to be labeled supplemental, so I disagree: it does help.

Halfdeck said this on August 1st, 2007 at 10:18 am

For as long as they leave the hack there….

My feeling is that they [Google] would rather have less tinkering around by webmasters, SEOs, etc. Unfortunately, with Google’s growth comes correspondingly more competition to get on top, along with the rewards that brings.

I like the tool, Half. The only thing I sorta preferred in the PHP version was the numeric values for PR; I actually find the bar images less useful. But that’s just me :)

Rgds

Richard

Richard Hearne said this on August 4th, 2007 at 2:25 am

Hey, thanks for the feedback Richard.

Regarding the old PageRank numbers: mousing over the graphic bar displays an approximation of the numbers used. The thing is, I switched from the original PageRank algorithm, where the total PageRank of a domain added up to its number of pages (e.g. a 100-page site would have a maximum total PageRank of 100), to a modified algorithm Michael Martinez mentioned, where all pages’ PageRanks add up to one. So the numbers you see are way smaller than what you used to see in the PHP version.
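For reference, here are the two textbook ways of writing PageRank, which I’m assuming correspond to the "original" and "modified" versions described above. Here $d$ is the damping factor (commonly 0.85), $L(q)$ is the number of outlinks on page $q$, $N$ is the number of pages, and the sums hold when every page has at least one outlink:

```latex
\begin{aligned}
\text{Classic:}\quad & PR(p) = (1-d) + d \sum_{q \to p} \frac{PR(q)}{L(q)},
  && \sum_{p} PR(p) = N \\
\text{Normalized:}\quad & PR(p) = \frac{1-d}{N} + d \sum_{q \to p} \frac{PR(q)}{L(q)},
  && \sum_{p} PR(p) = 1 \\
\Rightarrow\quad & PR_{\text{classic}}(p) = N \cdot PR_{\text{normalized}}(p)
\end{aligned}
```

So on a 100-page site, a page scoring 0.02 under the normalized version would have shown up as 2.0 under the classic one; the ranking of pages is the same, only the scale changes.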

I agree Googlers don’t like seeing SEOs and webmasters fixate on tinkering with stuff like the number of links per page instead of focusing on what matters most: building a good website. But it’s kinda like playing good music: you can’t just have passion and a good score. If you can’t nail the technical stuff (playing notes in the right pitch, playing in rhythm), it won’t sound like music. Optimizing a site for supplemental results isn’t like buying links or cloaking; as long as it doesn’t take up 90% of a webmaster’s time, I don’t see any harm in it.

Halfdeck said this on August 4th, 2007 at 7:08 am

Halfdeck, thanks a lot for this post and your other insightful posts on the topic… I’ve been playing with your “pagerank simulator” and it REALLY rocks for simulating what another PR6 link would/could do to my site… obviously it misses all the aspects of “topical pagerank”, but that’s not what it was built for, and it already IS a pretty cool app.

all the best

christoph c. cemper

Christoph C. Cemper said this on August 5th, 2007 at 9:57 am

Hey Christoph,

I’m glad you like it.

Not much else to say so here’s a random thought:

Some SEO consultants are apparently glad they don’t have to worry about clients asking them to solve supplemental issues anymore. Why? Unlike ranking penalties and such, supplemental results are something you have some control over. It takes 3 days or less to turn a supplemental result into a “main index result.” That’s much easier than getting rid of a -X penalty.

Mike Grehan’s school of online marketing (SEO = dead, traffic = good marketing) is churning out some SEOs who seem to either have an aversion to anything technical or not understand that building a good product comes before marketing.

Halfdeck said this on August 6th, 2007 at 10:02 am

I was amazed today. I haven’t talked to my webmaster yet, but I saw that my site was out of the supplemental results, and not only mine but others’ as well. I wondered what the reason was, so I searched the net and finally found that Google has removed the supplemental results label.

Now how will I know which pages Google is reading and which it isn’t?

Vinayak said this on August 7th, 2007 at 1:39 pm

Halfdeck, I always enjoy your take, and the tool is looking great. I have a couple of questions to email you, though.

I have it installed, but the URLs aren’t coming out color-coded and 60% are showing a status 405. I ran it on the site shown in my link here.

Thanks Again,

John said this on August 7th, 2007 at 10:54 pm

Hey John,

405 means timeout (for one reason or another, the server is too slow to respond).

For example, this URL gives an error:

http://www.missionbibles.com/bible/category/

Look at the TITLE: “Error 404”

An HTTP header check returns a 404. You need to fix that.

To see which internal URLs are linking to it, go to View > Filters > Link Sources.
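The HTTP header check mentioned above doesn’t need the tool; a minimal sketch using only Python’s standard-library `urllib` follows. The function name `http_status` and the 10-second timeout are my own illustrative choices. It sends a HEAD request on the assumption that the server answers HEAD the same way it answers GET; if a server doesn’t, changing `method` to `"GET"` is the simple fallback.

```python
import urllib.error
import urllib.request


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for url via a HEAD request (no body is downloaded).

    Redirects are followed. Network errors and timeouts are not caught and propagate as exceptions.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises HTTPError for 4xx/5xx responses; the status code is still on the error.
        return err.code
```

Calling `http_status("http://www.missionbibles.com/bible/category/")` would return whatever status that URL currently sends; a page that says “Error 404” in its title but returns 200 here is a soft 404, which is a separate problem worth fixing too.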

The URLs aren’t meant to come out color-coded. I’m gettin’ ready to publish a how-to on the tool, so hang on :)

Halfdeck said this on August 7th, 2007 at 11:31 pm