Third-Level Push (Modified Siloing) for Deeper Index Penetration

Third-Level Push (aka “siloing”), according to Dan Thies (who regained my attention after his recent article on Google proxy hacking), helps you get third-tier pages (e.g. article/product detail pages) in the main index and ranking higher by “taking more of the PageRank from your second tier, and pushing it down into the third tier.”

Dan explains:

In most sites, your global navigation links to the entire second tier from every page, including the home page. This causes the second tier pages to accumulate a lot of PageRank, at the expense of your third tier.

Makes perfect sense. Sites with slightly low link popularity (home page TBPR 3-4) often have no problem getting the home page and most of the category pages into the main index, but they often can’t get some of the product detail pages to stick. Why? Often it’s because of exactly what Dan said: the internal navigation turns the home page and second-level pages into PageRank hogs, leaving the third-level pages high and dry.

Some SEOs call Dan’s tactic “siloing” and attribute its benefits to better-themed internal linking. For example, Haylie from Bruce Clay talks about siloing, albeit with a focus on ranking, not index penetration. Siloing, in this sense, means setting up thematic pyramids via links or directory structure. Imagine a tree hierarchy where leaf nodes link up to their parent, a set of parents link up to their parent, and so on, until you reach the root node.

Dan disagrees: “At the time we all assumed this had something to do with the topics of the pages not being closely related, but we were wrong.” According to him, the increase in site traffic is due to the increase in PageRank at the third tier.

So how do you implement Third-Level Push? In brief:

1. Use nofollow to prevent second-level pages from passing PageRank to each other. This forces PageRank downwards to the third-level.

2. Use nofollow on links on third-level pages to second-level pages so that a third-level page passes PageRank to its parent page but not to any other pages in the second-tier.

3. Tiered Pairing: To prevent second-level pages from losing too much PageRank, you can link them in pairs: e.g. page A with B, C with D, and so on.

4. Circular Navigation: To circulate more PageRank on the leaf level, link them up in a circular fashion, so page A links to B and C, B links to C and D, etc.

That’s third-level push in a nutshell.
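Here is a minimal Python sketch of what steps 1 and 2 do to PageRank flow on a made-up site with one home page, four category pages, and three posts per category. It follows the assumption this post makes: a nofollowed link is simply dropped from the graph, so the page’s PageRank is split among its remaining followed links (Google has since said it no longer treats nofollow that way). The page names, site size, 0.85 damping factor, and iteration count are illustrative choices, not measurements of any real site.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Power-iteration PageRank over {page: [followed outlinks]}."""
    pages = sorted(set(links) | {t for outs in links.values() for t in outs})
    n = len(pages)
    pr = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pages with no followed outlinks spread their score over every page.
        dangling = sum(pr[p] for p in pages if not links.get(p))
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, outs in links.items():
            for target in outs:
                new[target] += damping * pr[page] / len(outs)
        pr = new
    return pr


def build_site(push, n_categories=4, posts_per_category=3):
    """Hypothetical three-tier site: home -> categories -> posts.

    push=False: global navigation; every page links home and to every category.
    push=True:  nofollowed links are left out of the graph, so categories no
                longer link to each other (step 1) and posts link only to home
                and their own parent category (step 2).
    """
    cats = [f"cat{i}" for i in range(n_categories)]
    links = {"home": list(cats)}
    for i, cat in enumerate(cats):
        posts = [f"post{i}-{j}" for j in range(posts_per_category)]
        siblings = [] if push else [c for c in cats if c != cat]
        links[cat] = ["home"] + siblings + posts
        for post in posts:
            links[post] = ["home", cat] if push else ["home"] + cats
    return links


def tier_totals(pr):
    """Sum PageRank by tier, using the naming scheme from build_site."""
    totals = {"home": 0.0, "category": 0.0, "post": 0.0}
    for page, score in pr.items():
        if page == "home":
            totals["home"] += score
        elif page.startswith("cat"):
            totals["category"] += score
        else:
            totals["post"] += score
    return totals


for push in (False, True):
    label = "third-level push" if push else "global navigation"
    totals = tier_totals(pagerank(build_site(push)))
    print(label, {tier: round(score, 3) for tier, score in totals.items()})
```

Working this toy model through by hand, the posts’ combined share rises from roughly 0.31 with global navigation to roughly 0.36 with the push, and the category tier drops from about 0.56 to about 0.40. The home page also gains because every post still links to it, so it is worth checking where the released PageRank actually lands.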

Note: Avoid deleting or adding links to do this (like I did); instead, just use nofollow. There’s no bigger sin than compromising user experience for the sake of SEO (well, there probably is, but let’s not get into that).

A Third-Level Push Implementation for WordPress

Does third-level push really work? I decided to use this blog as a guinea pig. But how do I implement third-level push on WordPress? Sure, you can just Google for an “SEO siloing” WordPress plugin, but that’s no fun. Warning to the faint of heart: don’t try this at home:

1. Open template-functions-category.php.

2. Find the function wp_list_cats($args = '') declaration.

3. Around line 236, just below the parse_str($args, $r); line, enter:

if ( !isset($r['nofollow']))

$r['nofollow'] = FALSE;

// That sets the default nofollow value, in case no value is passed.

4. Look for the line beginning return list_cats(.

5. At the end of its long argument list (after $r['hierarchical']), add: , $r['nofollow']

6. Find the function list_cats(...) declaration.

7. At the end of the declaration's argument list (around line 279), after $hierarchical=FALSE, add: , $nofollow=FALSE

8. Now look for the line that builds the <a href tag, around line 327.

9. Replace $link = '<a href="'.get_category_link($category->cat_ID).'" '; with:

if ($nofollow == FALSE) $link = '<a href="'.get_category_link($category->cat_ID).'" ';

else $link = '<a href="'.get_category_link($category->cat_ID).'" rel="nofollow" ';

10. Finally, open sidebar.php. Look for the wp_list_cats line for single posts (not the home page), around line 104, that looks like: wp_list_cats('sort_column=name&optioncount=1&hierarchical=0');

Replace that with wp_list_cats('sort_column=name&optioncount=1&hierarchical=0&nofollow=TRUE');

That’s it.

Potential negative side effects: If your blog doesn’t have a lot of backlinks, your category pages might go supplemental. In that case, try Tiered Pairing.

UPDATE: Joost apparently incorporated my idea into his Robots Meta Plugin. Check it out.

Does Siloing/Third-Level Push Really Work?

So what happens to PageRank flow after I implement a third-level push?

Here’s a before and after:

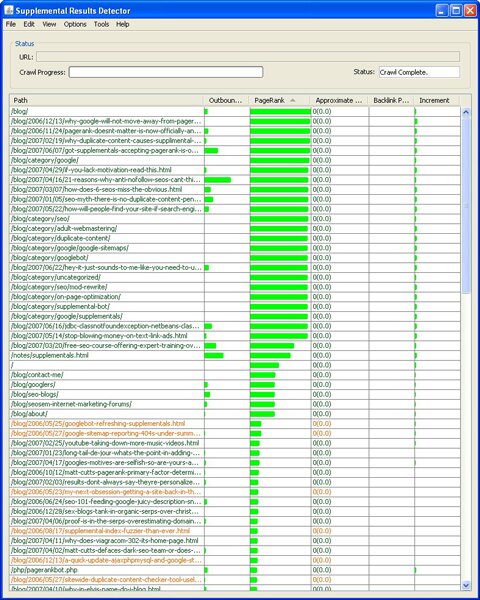

Before:

(Pages in the main index are green. Notice that I channeled most of my site’s PageRank to only the URLs I want to rank, so although I had some supplemental URLs, none of them were pages I cared about.)

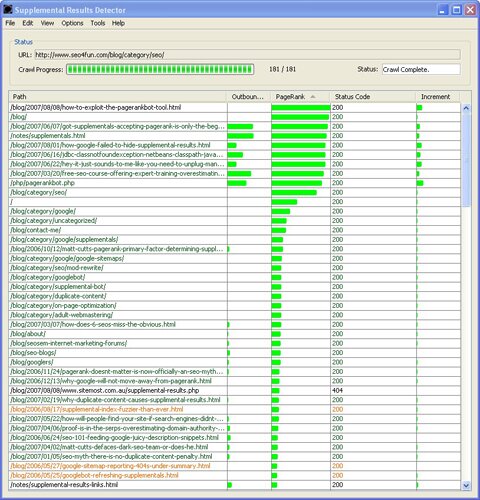

After:

Hmm… so basically I lost PageRank to some of my unpopular category pages. But where did all that PageRank go? To just a handful of recently published posts, which had high PageRank to begin with. So it doesn’t really look like I gained anything, does it? In fact, it looks to me like a whole bunch of pages might go supplemental.

See, there’s no point in having pages with too much PageRank (at least for getting pages indexed). You want a moderate amount of PageRank on as many pages as possible.

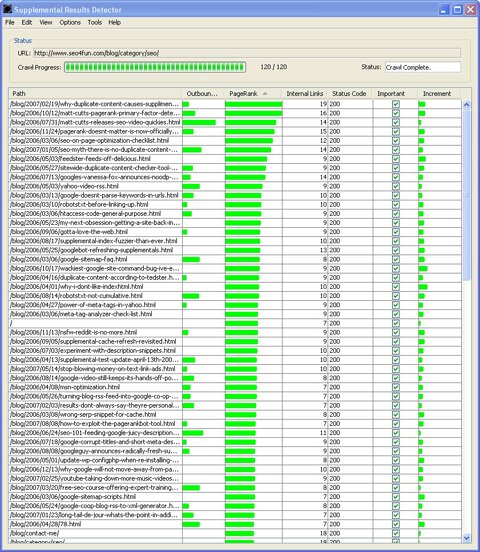

Nah, instead I want something more like this:

Notice now PageRanks are more evenly spread throughout my site.

(screenshots generated by PageRankBot).

Sitewides Gotta Go (Modified Third-Level Push)

The problem was that I had other sitewide links besides the links to category pages, like “recent posts”, “top posts”, and links to the home page. Those URLs stole the PageRank the category pages gave up.

In short, sitewide links are bad. So what did I do?

1. Dumped sitewide links: Got rid of sitewide links to recent posts and top posts (better to nofollow them, but I was in a rush) and nofollowed links to the blog home page. Used third-level push (nofollowed sitewide links to category pages), except that I prevented blog articles from linking back to their parent category pages, to keep those pages from accumulating too much PageRank.

2. Added a related-posts plugin to circulate PageRank to internal pages “randomly” instead of through sitewide links.
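To see why swapping a sitewide widget for related-post links evens things out, here is a toy Python comparison on a hypothetical 12-post blog. In one version every post carries a sitewide “recent posts” widget pointing at the same three posts. In the other, each post links to three other posts picked by fixed offsets. The offsets are only a stand-in for a related-posts plugin: a real plugin picks by topic, and its choices would be less even. `spread` is a made-up helper that reports the ratio between the best- and worst-ranked posts.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Power-iteration PageRank over {page: [followed outlinks]}."""
    pages = sorted(set(links) | {t for outs in links.values() for t in outs})
    n = len(pages)
    pr = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pages with no followed outlinks spread their score over every page.
        dangling = sum(pr[p] for p in pages if not links.get(p))
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, outs in links.items():
            for target in outs:
                new[target] += damping * pr[page] / len(outs)
        pr = new
    return pr


def blog(sitewide_recent, n_posts=12):
    """Hypothetical blog: the home page links to every post, every post links home.

    sitewide_recent=True:  each post also carries a sitewide "recent posts"
                           widget pointing at post0-post2.
    sitewide_recent=False: each post links to three other posts picked by fixed
                           offsets (assumes n_posts > 7 so no post links to itself).
    """
    posts = [f"post{i}" for i in range(n_posts)]
    links = {"home": list(posts)}
    for i, post in enumerate(posts):
        if sitewide_recent:
            extra = [p for p in posts[:3] if p != post]
        else:
            extra = [posts[(i + k) % n_posts] for k in (1, 5, 7)]
        links[post] = ["home"] + extra
    return links


def spread(pr):
    """Ratio between the best- and worst-ranked post."""
    scores = [score for page, score in pr.items() if page.startswith("post")]
    return max(scores) / min(scores)


print("sitewide recent posts:", round(spread(pagerank(blog(True))), 2))
print("related posts:", round(spread(pagerank(blog(False))), 2))
```

Worked through by hand, the sitewide widget leaves the three “recent” posts with roughly 6.7 times the PageRank of every other post. The fixed-offset links leave all twelve posts level, because each post ends up with the same number of inbound links.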

Aside: Some SEOs will tell you that you should never, ever, ever nofollow links to your internal pages because it sends a negative quality signal to Google. Vanessa Fox, an ex-Googler, confirmed that one reason nofollowing internal links may be a bad idea is that the target URLs will still be indexed if other people link to them (not that I take her word blindly as gospel, but hey, I don’t have time to test everything a Googler says). My policy is to use nofollow on internal links only when I want to control the amount of PageRank flowing into a URL but still want to keep that URL in the main index. For example, I wouldn’t nofollow a link to my privacy policy or TOS; I would just use a robots.txt disallow. (Sure, robots.txt doesn’t guarantee that a URL stays out of the main index, but I don’t care about that; I just don’t want internal link juice to flow to my TOS page. If you really want to get a URL out of Google’s index, use META noindex instead of robots.txt.)

Conclusion

Will this setup help me or hurt me? Time will tell. The main problem is that my site’s PageRank is now unfocused; my top posts aren’t getting any special attention. I could modify WordPress so that X% of links to top posts are nofollowed instead of nofollowing every single sitewide link. That way, my most important pages will have the highest PageRank, but they won’t be PageRank hogs.
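One way to nofollow only X% of links to top posts is sketched below in Python. The helper names, the example.com URLs, and the decision to hash each (page, target) pair are all assumptions of this sketch. Hashing is used instead of calling a random-number generator so that each page produces the same rel value on every request, which gives crawlers a stable link graph. Roughly X% of pages still end up nofollowing the link.

```python
import hashlib


def top_post_rel(page_url, target_url, nofollow_percent):
    """Decide, stably, whether this page's link to a top post is nofollowed.

    Hashing the (page, target) pair instead of calling random() means a page
    renders the same rel value on every request. Roughly nofollow_percent%
    of pairs come back "nofollow".
    """
    digest = hashlib.sha256(f"{page_url}|{target_url}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "nofollow" if bucket < nofollow_percent else ""


def render_top_post_link(page_url, target_url, anchor, nofollow_percent):
    """Build the anchor tag, adding rel="nofollow" only when selected."""
    rel = top_post_rel(page_url, target_url, nofollow_percent)
    rel_attr = f' rel="{rel}"' if rel else ""
    return f'<a href="{target_url}"{rel_attr}>{anchor}</a>'


# Hypothetical URLs for illustration only.
pages = [f"https://example.com/post-{i}/" for i in range(1000)]
target = "https://example.com/best-post/"
nofollowed = sum(top_post_rel(p, target, 70) == "nofollow" for p in pages)
print(f"{nofollowed} of {len(pages)} links to the top post are nofollowed")
print(render_top_post_link(pages[0], target, "Best post", 70))
```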

I do believe that third-level push can work, as long as you have some kind of tool to verify that PageRank is actually being pushed down to the third-tier pages. Simply nofollowing links to category pages won’t guarantee that, though preventing sitewide links from passing juice probably will.

Why should you care about this stuff?

As Matt Cutts recently explained (emphasis mine):

You could do a similar post with a bunch of Play-Doh and show how you have a certain amount of Play-Doh (your PageRank), and you choose with your internal linking how to spread that Play-Doh throughout your site. If a given page has enough PageRank (reasonable-sized ball of Play-Doh), it can be in our main web index. If it has not-very-much PageRank (tiny ball of Play-Doh), it might be a supplemental result. And if only a miniscule iota of Play-Doh makes it to a page, then we might not get a chance to crawl that page.

The Play-Doh / PageRank metaphor is kinda disturbing, but hey, whatever works.

You are creating too much work for yourself editing core files and also still have a ball linking structure in your 2nd tier.

It is much better to use the add_link_attribute plugin for sidebar elements.

One gotcha for many people is that they will try to combine this with blocking off their archive pages from indexing, thus reducing the number of internal links per page.

The end result is they will create a great sacrificial linking structure.

Did you see my Sandcastles linking structure?

I need to get your tools working and finish up my optimization (and get the final theme published). When I tried your PHP script the other day it barfed after 2 hours, so I will give the javascript version a shot.

I did notice that for some reason the php script was not assigning nofollow to the links from my antisocial plugin, where the image links to the social bookmark sites are nofollowed.

Andy Beard said this on August 23rd, 2007 at 3:29 am

“You are creating too much work for yourself editing core files”

Not really Andy. It took me a couple minutes to hack that (though it took a bit longer to write the solution out in this post). I’ll check out the plugin though; thanks for mentioning it.

“also still have a ball linking structure in your 2nd tier.”

Ok…according to Leslie Rohde’s “Dynamic Linking”, a ball linking structure is basically a structure where all pages link to each other:

“the sub-pages will … therefore not need as high a PR value, nor as much Link Reputation. It’s our home page that needs the most links, but our linking strategy has placed it on a par with our internal pages.”

You’re right. Links on my category pages aren’t nofollowed, but still the impact is minimal because now I only have the home page linking into those pages.

I disagree with Leslie though; when dealing with supplemental results, the sub-pages need as much PageRank as you can afford to give them.

“One gotcha for many people is that they will try to combine this with blocking off their archive pages from indexing, thus reducing the number of internal links per page.”

Good point. That can lead to more PageRank bleed.

“Did you see my Sandcastles linking structure?” Yes. I can’t give you my take on it till I re-read your post a couple times though :)

I tested my tool’s Java version using several SEO blogs, including yours I think. Unlike the PHP version, it reads robots.txt, META noindex tags, and handles 301 redirects. Please let me know if the antisocial plugin nofollows are still not being picked up.

Halfdeck said this on August 23rd, 2007 at 6:28 am

I don’t have my site using the sandcastles structure fully, plus you have to allow for how many dofollow comments and trackbacks I have to cope with ;)

I don’t think you are really disagreeing with Leslie.

At the category and date archive level you are creating a Campbell Mini-ball.

Then you are channelling down to single pages from both, and then channelling back up through the date archives.

With no incoming or outgoing links, you are concentrating PR in your date archives.

We now introduce some links coming in, and some external links on each page.

I have used the same number of content pages as I used in my own modelling although I do benefit from more total pages due to the tagging.

So let’s be fair and add in some more pages as dummies.

The additional 9 pages do not have incoming or outgoing links just like my tag pages, so offer a similar (though not exact) benefit.

You will see that you don’t have quite as much pagerank concentrated in your single pages, and far too much in your date archives.

Here is a version of Sandcastles to compare, but in this one I have removed the sitemap so it has an identical number of pages.

On the plus side, if you reversed the role of the category and date archives, you would end up with some fairly powerful categories and a lot more relevance being passed.

I know this is all theory.

One of the advantages of doing everything with template changes and plugins is you can have multiple themes and switch between them to then use your tool.

I am going to have to get your tool up and running now, plus finish an article linking through.

I need to test out what Sebastian is doing as it wasn’t very clear - I really enjoy this kind of research.

Andy Beard said this on August 23rd, 2007 at 11:38 am

[…] Halfdeck discusses SEO for Fun, and in my experience he only writes useful unique content. He uses his blog as a testbed for linking structures and also provides a tool for linking structure analysis. […]

Pushing WordPress SEO Boundaries | Andy Beard - Niche Marketing said this on August 23rd, 2007 at 2:41 pm

“At the category … level you are creating a Campbell Mini-ball”

Right, I overlooked dealing with the category pages.

“With no incoming or outgoing link you are concentrating PR in your date archives.”

All my archive pages (save two) were robots.txt-disallowed, though. Now I’ve updated robots.txt to disallow them all, so PageRank should be “trapped” at the third level. I used Allow and regexp-style patterns in my robots.txt, which I think PageRankBot choked on. Since there are a couple of backlinks to those archive pages, I might remove the disallows and nofollow them instead.

If you look at the third screenshot (which now reflects reality more closely), you’ll notice there’s no PageRank concentration. The URLs you see there (except the last URL - the SEO category page, and the domain home page) are single post pages. The tactic I used was linking semi-randomly at the third-level, and completely eliminating sitewide links.

One problem I created, like you said, is not having enough internal links per page. Ideally, I want to have no sitewides but have as many internal links as possible per page (up to 100-150).

Another problem, like I mentioned, is lack of focus. But if I use a random function to remove nofollows from some top-post links, I can “staccato” my most important pages. It might sound a little extreme, but the beauty of it is that it gives me more control over PageRank distribution without compromising user experience.

I noticed Sebastian’s post as well, which made me kinda wish I had PowerPoint. I gotta admit I do enjoy reading about “under-the-hood” stuff.

It took me a while to decipher the PageRank calculator grid. If I understand it correctly, with the Sandcastles approach you have only the tag pages linking back up to the home page, category pages don’t crosslink with each other, and none of the content pages link back up to the category pages. The home page gets some weighted attention because a lot of links point into the tag pages and the tag pages link up to the home page, but the setup is much more controlled than having every page on the domain link back up to the home page. It makes the default WordPress look like a broken template.

I actually started looking into this after suggesting third-level push to a client with supplemental problems. I wanted to make sure I wasn’t steering him wrong, and to have a post that he (or his programmer) could read that explained internal link structure in more detail. I ended up learning a lot in the process.

Halfdeck said this on August 23rd, 2007 at 7:18 pm

I missed that robots.txt hmm…

Even if you don’t factor in any discounting of sidebar links, you really need another 10 or even 20 internal links on this page.

You have 4 external links; I would aim to have 20 internal.

Sandcastles seems complicated, but it can be achieved with just a few plugins and fairly simple theme changes.

I have no idea how Google handles pages with massive amounts of links. My page on dofollow plugins is just crazy now, with 200 external links and a huge, growing tag cloud at the bottom.

Andy Beard said this on August 24th, 2007 at 7:23 am

“you really need another 10 or even 20 internal links on this page.”

Yeah, I do. I’m actually more worried about my client’s site (which is commercial with very few outbounds), but I’ll have to come up with a solution to that.

Halfdeck said this on August 25th, 2007 at 9:42 am

Hey, man, I really appreciate not just this post, but the tool and the other post on how to use it. Going to send that out in the next newsletter. It’s not a Digg effect but you should see a few hundred downloads pretty fast. :D

I’ll have to go back in and make sure that my description of the 3rd-level-push idea is completely accurate in the book. I’m going to be really mad at myself if I screwed that up…

With only 129 pages (I didn’t spider, just Googled site: so that may be way off the mark) your blog isn’t much of a candidate for any of Leslie’s schemes (you may even do better SEO-wise with 100% cross-linking), but it’s fun to play with this stuff anyway.

One of the problems with analyzing things internally only, is that it actually does matter where PageRank comes in, and how much. The “one way valve” strategy (home page has one link to a site map which links into the rest of the site) looks OK if you only do internal analysis, because you added a page, so there’s more total PageRank.

When you actually do the math on what happens after you push PageRank (external inbound links) into that single-link home page, then you realize that you’d have been better off pushing PageRank directly into the sitemap instead of losing some at the “home page,” because it doesn’t all make it through the one-way valve. Which basically means you might as well make your home page look like a home page.
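Dan’s point about entry points can be checked with a variant of PageRank that sends all of the random-jump weight to a single page. This is a rough way to model that page receiving all of a site’s backlinks, and it is a modeling choice made here, not something from the comment. The Python sketch builds a hypothetical one-way-valve site: home links only to a sitemap, the sitemap links to ten content pages, and each content page links back to home and the sitemap. It then compares external PageRank entering at the home page with external PageRank entering directly at the sitemap.

```python
def entry_pagerank(links, entry, damping=0.85, iterations=100):
    """Power-iteration PageRank in which every random jump lands on `entry`.

    Concentrating the jump weight on one page is a crude stand-in for that
    page receiving all of the site's external backlinks.
    """
    pages = sorted(set(links) | {t for outs in links.values() for t in outs})
    pr = {p: 1.0 if p == entry else 0.0 for p in pages}
    for _ in range(iterations):
        new = {p: 0.0 for p in pages}
        # Jumps, plus anything held by pages without followed outlinks, go to entry.
        dangling = sum(pr[p] for p in pages if not links.get(p))
        new[entry] += (1 - damping) + damping * dangling
        for page, outs in links.items():
            for target in outs:
                new[target] += damping * pr[page] / len(outs)
        pr = new
    return pr


def one_way_valve(n_content=10):
    """Hypothetical site: home -> sitemap -> content pages -> home and sitemap."""
    content = [f"page{i}" for i in range(n_content)]
    links = {"home": ["sitemap"], "sitemap": list(content)}
    for page in content:
        links[page] = ["home", "sitemap"]
    return links


def content_share(pr):
    """Total PageRank held by the content pages."""
    return sum(score for page, score in pr.items() if page.startswith("page"))


site = one_way_valve()
for entry in ("home", "sitemap"):
    print(f"backlinks into {entry}: content pages hold",
          round(content_share(entry_pagerank(site, entry)), 3))
```

Worked through by hand, the content pages hold about 0.33 of the total when backlinks hit the home page and about 0.38 when they hit the sitemap. The extra hop through the valve costs a round of damping.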

Dan Thies said this on August 29th, 2007 at 3:39 pm

Thanks Dan. Your e-book was a refreshing read. Now if only I can convince one of my clients that using nofollow on internal links won’t get his site in trouble. Rand Fishkin just published a Matt Cutts interview where Rand says:

“Nofollow is now, officially, a “tool” that power users and webmasters should be employing on their sites as a way to control the flow of link juice and point it in the very best directions.”

Interesting times.

“With only 129 pages (I didn’t spider, just Googled site: so that may be way off the mark)”

Right. Originally I had around 200 pages, but I’m seeing what happens if I trim down. The site I’m being paid to work on has around 2000 pages.

“One of the problems with analyzing things internally only, is that it actually does matter where PageRank comes in, and how much.”

I agree. With my tool you have an option to compensate for that by simulating backlinks to a specific page, but it’s far from accurate. Honestly, I’m not exactly sure how to go about simulating that. It makes a huge difference where the entry points are. For example, with this domain I only have a few backlinks to my root URL; most of my backlinks point either to my blog home page or to individual blog posts. A commercial site might have 99% of its backlinks pointing at the home page. Like you said, things like that make a huge difference to how PageRank flows internally.

Halfdeck said this on August 30th, 2007 at 3:45 am

Nice. Finally. They’ve really been “unofficially” saying that about nofollow for a long time. Maybe I can catch a break now. I have been called all kinds of names for even talking about using nofollow to manipulate PageRank internally… so much that I actually had to explain why it’s good for the web and not a “black hat” technique.

As long as Google considers PageRank important, it will be important to understand and use that knowledge to get more of your important content into the index. I have students “discover” that they actually belonged on the top of SERPs after we did some basic internal linking changes - with no real link building campaign at all.

Your client is a good example - 2000 pages, and I’d guess probably 1/4 actually indexed? You can get that whole site indexed with the right structure and a relatively small amount of incoming links.

Dan Thies said this on August 30th, 2007 at 9:41 am

“Nice. Finally.”

Since a lot of SEOs still look cross-eyed at nofollow and PageRank, I doubt either of them will catch on at the grass-roots level just yet. Too many SEO-related ideas are irrational and emotional, not tactical. I’m glad to see Rand Fishkin coming around, though.

“I’d guess probably 1/4 actually indexed?”

Even less than that. His second-tier pages are TBPR 4s, as is his home page. He’s got a relatively decent link profile; he only links out editorially, and he’s got a handful of Yahoo! Directory links. Many of his landing pages rank on the front page of Google. He just needs a few more pages in the main index.

Halfdeck said this on August 30th, 2007 at 7:02 pm

Believe it or not, even after reading the exact words, some of these people STILL don’t get it. I think I’m going to collect all their objections up and ask Matt for a 1-question email interview: “Matt, are any of the following even remotely true?”

Dan Thies said this on August 30th, 2007 at 9:55 pm

It is similar to the supplemental results being caused by duplicate content situation a few months ago and Matt having to debunk it.

Googlers had already been saying it was lack of pagerank, which could be the result of duplicate content but not necessarily.

Andy Beard said this on August 31st, 2007 at 3:31 pm

“It is similar to the supplemental results being caused by duplicate content situation a few months ago and Matt having to debunk it.”

This all circles back to John Andrews’ recent post (with a “seo-whiners” slug): “when Google talks, listen up. Google is practically telling you how to rank.”

Here’s Eric Enge’s take.

Halfdeck said this on August 31st, 2007 at 5:57 pm

[…] He even used it to simulate a '3rd level push' - sort of (I don't think he cut the links from the second tier to the home page and left the sitewide links in place), and simply by playing around realized that the "sitewide" links were holding him back from getting more PageRank deeper into the site. It would take you a lot of time to do that without a tool - with it, he sorted out a better PageRank distribution in an afternoon. […]

How To Get More Pages Indexed With Nofollow said this on September 4th, 2007 at 6:51 pm

Yeah, I read that piece from Eric, but it doesn’t take into account that many people have been doing this for years, and so has eBay, with Epinions and Rent.com.

Andy Beard said this on September 5th, 2007 at 10:30 am

“1. Use nofollow to prevent second-level pages from passing PageRank to each other. This forces PageRank downwards to the third-level.”

This prevents crawlers from following those sibling links on the second tier, hence reducing the frequency of crawl for those more important pages (as well as decreasing their chances of being indexed).

“2. Use nofollow on links on third-level pages to second-level pages so that a third-level page passes PageRank to its parent page but not to any other pages in the second-tier.”

This prevents crawlers from following links to those aunt and uncle pages, thus reducing the frequency of crawl for those more important pages (as well as decreasing their chances of being indexed).

“3. Tiered Pairing: To prevent second-level pages from losing too much PageRank, you can link them in pairs: e.g. page A with B, C with D, and so on.”

This reduces the number of followed links on the second tier, thus reducing the frequency of crawl for those more important pages (as well as decreasing their chances of being indexed).

“4. Circular Navigation: To circulate more PageRank on the leaf level, link them up in a circular fashion, so page A links to B and C, B links to C and D, etc.”

That has always worked better than just having leaf pages link back to their parents. Why? Because it increases the number of inbound links to each page.
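The inbound-link argument is easy to check by counting. This Python sketch uses hypothetical page names and five leaves under one parent. It counts the followed inbound links each leaf receives, first when leaves link only back to their parent and then with the circular navigation pattern from step 4 of the post.

```python
from collections import Counter


def leaf_links(leaves, circular):
    """Hypothetical category with a parent page linking to every leaf.

    circular=False: each leaf links back to its parent only.
    circular=True:  each leaf also links to the next two leaves, wrapping
                    around (A -> B, C; B -> C, D; ...). Needs 3+ leaves.
    """
    links = {"parent": list(leaves)}
    n = len(leaves)
    for i, leaf in enumerate(leaves):
        outs = ["parent"]
        if circular:
            outs += [leaves[(i + 1) % n], leaves[(i + 2) % n]]
        links[leaf] = outs
    return links


def inbound_counts(links):
    """Count followed inbound links per page."""
    counts = Counter()
    for outs in links.values():
        counts.update(outs)
    return counts


leaves = ["A", "B", "C", "D", "E"]
for circular in (False, True):
    counts = inbound_counts(leaf_links(leaves, circular))
    print("circular" if circular else "parent only",
          {leaf: counts[leaf] for leaf in leaves})
```

With parent-only links each leaf has a single inbound link (from the parent). Circular navigation raises that to three per leaf, and the parent keeps its five.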

NOFOLLOW is not about PageRank; it’s about pretending a link doesn’t exist. Matt Cutts says that Google won’t follow the link if it’s NOFOLLOWed, and that means the chances of an important page being crawled and indexed are reduced if you use “rel=’nofollow’”.

This kind of bad architectural design is not a substitute for strong internal linkage. People need to understand that when they turn on NOFOLLOW they turn off crawling and link anchor text, not just PageRank.

Michael Martinez said this on September 6th, 2007 at 7:38 pm

“People need to understand that when they turn on NOFOLLOW they turn off crawling and link anchor text, not just PageRank.”

Michael, good point about nofollow turning off link anchor text. That’s a pitfall. If a website’s second-tier pages are ranking well for keywords in the internal anchor text, sitewide nofollow will obliterate those rankings. That’s why I proposed a partial nofollow. Also, if I manage to gain deeper index penetration from this tactic, that’s more anchor text than if I had only a few of my pages in the main index.

What to you is good architectural design?

“that means the chances of an important page being crawled and indexed are reduced if you use “rel=’nofollow’”

I’ve never seen Google miss a crawlable page on any of my domains. In fact, the click path from the home page to a leaf node is shortened when you cut out the middleman, so I’d expect leaf nodes to be crawled more often. If PageRank is the main determinant of crawl depth and frequency, then sending more PageRank to the leaf nodes might encourage Googlebot to crawl them more often. Also, bots can’t tell a third-tier page from an uncle page; they’re all just links in Googlebot’s crawl queue waiting to be crawled. Lastly, the only time I’d expect bots to have trouble discovering URLs is when there’s only one path to a URL, and that URL is the only one linking to the next URL, and so on, in a continuous chain.
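Both sides of this exchange are about crawl paths, and a breadth-first search over a link graph makes them concrete. The Python sketch below assumes, as Michael’s comment says of Google, that the crawler skips nofollowed links entirely. The page names and links are made up. In this model, nofollowing a sibling or uncle link costs nothing when a followed path already reaches the page, but a page reachable only through nofollowed links drops out of the crawl.

```python
from collections import deque


def click_depths(links, start="home", follow_nofollow=False):
    """Breadth-first click depth of every page reachable from `start`.

    links maps page -> list of (target, is_nofollow) pairs. With
    follow_nofollow=False the crawler skips nofollowed links entirely.
    """
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target, is_nofollow in links.get(page, []):
            if is_nofollow and not follow_nofollow:
                continue
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical blog: sibling and "uncle" category links are nofollowed,
# and post-4 is linked only once, through a nofollowed link on post-2.
site = {
    "home": [("cat-a", False), ("cat-b", False), ("post-1", False)],
    "cat-a": [("home", False), ("cat-b", True), ("post-1", False), ("post-2", False)],
    "cat-b": [("home", False), ("cat-a", True), ("post-3", False)],
    "post-1": [("home", False), ("cat-a", False), ("cat-b", True)],
    "post-2": [("home", False), ("cat-a", False), ("cat-b", True), ("post-4", True)],
    "post-3": [("home", False), ("cat-b", False), ("cat-a", True)],
}

print("skipping nofollow:", click_depths(site))
print("following every link:", click_depths(site, follow_nofollow=True))
```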

Halfdeck said this on September 6th, 2007 at 8:26 pm

[…] After reading Halfdeck’s post about Third Level Push, which Roy pointed me at, I decided to add the necessary code to the Robots Meta plugin, since the code example Halfdeck gave was for an older version of WordPress (the wp_list_cats function he uses was deprecated in WordPress 2.1). […]

More WordPress SEO: robots-meta update - SEO Blog - Joost de Valk said this on September 9th, 2007 at 7:11 am

“What to you is good architectural design?”

The more internal linkage, the better. The more internal links you have pointing to pages, the sooner and more often they are crawled and indexed.

“I’ve never seen Google miss a crawlable page on any of my domains.”

I have.

However, you and Dan have both missed a major point in all this. PageRank may be driving the crawling and the indexing (two different actions), but a crawlable link has to be found before the page on the other end is even queued for crawling. And that is just as important after the page has been indexed as before it is indexed.

When Google goes through a major data dump as it just did in August, the more internal links you have the less stress your site undergoes in the dumping process. Also, the more internal links you have the sooner you get your pages recrawled and reindexed.

For a typical small business Web site, there are no unimportant pages. Search engine optimization is not about favoring one page over another. It’s about optimizing every page for search.

People who follow this bad advice about using nofollow on their own pages will not realize any SEO benefit. They may get a few pages crawled more often but they could easily achieve that without resorting to nofollow.

Michael Martinez said this on September 10th, 2007 at 3:21 pm

“the more internal links you have the less stress your site undergoes in the dumping process.”

Agreed. But I’m not talking about blocking a ton of internal links here; just 4~5 category links that, on a blog, I find pretty useless to begin with.

“For a typical small business Web site, there are no unimportant pages.”

Michael, that happens to be true for one site I’m being paid to work on, but Dan made a good point that affiliate marketing sites usually don’t bother nailing top positions with their category pages. I’d rather rank on the first page with 10,000 product pages than with 10-20 category pages. On my blog, in particular, there are some old posts I wrote that aren’t valuable to me at all. I don’t mind shoving them into the supplemental index.

“They may get a few pages crawled more often but they could easily achieve that without resorting to nofollow.”

I don’t need every single page linking indiscriminately to a navigational URL (e.g. home page). True, you can avoid that scenario without using nofollow (e.g. Andy Beard’s Sandcastles). Still, when you have 1,000 sitewide links to the home page, adding a nofollow to 2-3 of them isn’t going to kill your site either.

Halfdeck said this on September 11th, 2007 at 5:51 pm

I have 2 websites that I have been using the tool on. One site works perfectly, the other (Real Estate Site) causes the tool to lock up and freeze at 4497/4507 pages. Is there something in the site that causes the tool to not continue?

Robert Earl said this on September 13th, 2007 at 8:00 pm

Robert, you can 1) try downloading the most up-to-date version of my tool, 2) run your site through Xenu first (sometimes ill-formed relative links can lock the tool in an infinite loop), or 3) run it in DOS and email me a screenshot of the error messages you see.

Halfdeck said this on September 13th, 2007 at 9:55 pm

What a great tool!!!! - It works perfectly. It shows the entire site and what is going on. Thank you!!!!

Robert Earl said this on September 15th, 2007 at 1:51 pm

Seems like a lot of work for a strategy that is unusual and unproven at best. IMHO, good linking between posts (esp the related articles plugin for wordpress) will help get your deeper content indexed. Those links will go to your third level straight off the homepage (when the posts are new anyways) and then to other third level material.

Reminds me of newbies talking about pagerank hoarding, except you’re obviously not a newb and what you’re saying is clearly sophisticated. So I’m not sure what to make of this, but I’m not convinced, with all due respect. Look forward to reading if it got you any results!

Gabriel Goldenberg said this on October 1st, 2007 at 1:42 pm

Yo friggin’ halfdeck, do you have an email address or are you as secretive as Sebastian? I want to know what you know, please email me at admin [@] seobuzzbox.com , got a question for you!

Thanks,

Aaron

SEO Buzz Box said this on October 3rd, 2007 at 7:58 pm

“I want to know what you know”

Like they say, it’s all in the wrist, not in your head :) You got my email.

Halfdeck said this on October 5th, 2007 at 2:24 pm

“Seems like a lot of work for a strategy that is unusual and unproven at best.”

Hi Gabriel,

If you read Matt Cutts’ recent interview with Eric Enge, you’ll learn, as I did, that robots.txt and META noindex are pretty much useless when “sculpting” PageRank. So you have a few choices: don’t link to “untargeted” pages, like TOS/about me/privacy policy - not something I’d recommend because they’re useful to visitors. Those pages usually hoard 90% of a site’s PageRank. That means less PageRank for your article pages. Too little PageRank flowing downstream means supplemental results.

This isn’t about “hoarding” PageRank by not linking out or nofollowing every outbound or counting the number of links on a TBPR 6 directory page. It’s about channeling PageRank to article pages to lower the chance of them turning supplemental.

The second option, as I said, is to link to those fluff pages for usability’s sake but nofollow the links, so you can save your PageRank for pages that are more important to you.

“Reminds me of newbies talking about pagerank hoarding, except you’re obviously not a newb and what you’re saying is clearly sophisticated.”

As Matt Cutts also explained recently, if your site is a TBPR 2 site (home page = TBPR 2), this tactic isn’t going to have much impact, because you don’t have much PageRank to play with. But if your site is a TBPR 7 site with thousands of pages, controlling PageRank flow can have a dramatic impact. How is that?

As Dan Thies recently explained, to rank high you need targeted anchor text and a decent amount of authority (PageRank). You can hunt for targeted anchor text outside your domain, but that can be difficult via a viral campaign, costly via a paid link campaign, and ineffective via reciprocal link spam (if you don’t know when to say when). Alternatively, you can gain authority (more link pop) via viral marketing without concerning yourself with anchor text, use that authority to get thousands of pages indexed, and then use those pages to provide thousands of targeted anchor text links. Think about some of the reasons why Wikipedia ranks so well.

My article explains how you can improve the depth of index penetration by using nofollow. Neither robots.txt nor META noindex can help you do that, because they don’t block PageRank. The goal here isn’t to hoard PageRank; you can’t create more PageRank by adding nofollow. You are just controlling where the PageRank goes and how much falls on each page.
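To make “controlling where the PageRank goes” concrete, here is a minimal sketch of the classic iterative PageRank calculation on a hypothetical ten-page site: a home page, two categories, four articles, and three sitewide “fluff” pages (about, privacy, TOS). It compares linking to the fluff pages normally versus nofollowing those links (the second option above). It assumes the older nofollow handling, where nofollowed links simply drop out of the graph. The page names, link structure, and damping factor of 0.85 are illustrative assumptions, not a model of how any search engine actually computes rankings.

```python
"""Toy PageRank model of a small site, with and without nofollowed fluff links.

Assumption: nofollowed links are removed from the graph entirely, so a
page's PageRank is split only among its followed links.
"""

DAMPING = 0.85
FLUFF = ["about", "privacy", "tos"]                      # hypothetical fluff pages
ARTICLES = {"cat1": ["a1", "a2"], "cat2": ["a3", "a4"]}  # hypothetical 2nd/3rd tiers


def pagerank(links, damping=DAMPING, iterations=100):
    """links maps each page to a list of (target, is_nofollow) pairs."""
    pages = sorted(set(links) | {t for outs in links.values() for t, _ in outs})
    n = len(pages)
    followed = {p: [t for t, nf in links.get(p, []) if not nf] for p in pages}
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in pages}
        for p in pages:
            outs = followed[p]
            if outs:
                # Split this page's vote evenly across its followed links only.
                share = damping * rank[p] / len(outs)
                for t in outs:
                    new[t] += share
            else:
                # Dangling page: spread its vote over every page.
                for t in pages:
                    new[t] += damping * rank[p] / n
        rank = new
    return rank


def build_site(nofollow_fluff):
    """Home -> categories -> articles, plus sitewide links to the fluff pages."""
    fluff = [(f, nofollow_fluff) for f in FLUFF]
    links = {"home": fluff + [(c, False) for c in ARTICLES]}
    for cat, arts in ARTICLES.items():
        links[cat] = fluff + [("home", False)] + [(a, False) for a in arts]
        for a in arts:
            # Third-level page links to home and to its own parent category.
            links[a] = fluff + [("home", False), (cat, False)]
    for f in FLUFF:
        links[f] = [("home", False)]
    return links


if __name__ == "__main__":
    before = pagerank(build_site(nofollow_fluff=False))
    after = pagerank(build_site(nofollow_fluff=True))
    for page in ["home", "cat1", "a1", "about"]:
        print(f"{page:>6}: {before[page]:.4f} -> {after[page]:.4f}")
```

In this toy graph, each article’s score should rise and the fluff pages’ scores should fall when the fluff links are nofollowed, while the total stays at 1.0: PageRank is redirected, not created.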

Halfdeck said this on October 12th, 2007 at 7:53 am

[…] About two weeks ago I was browsing SEO-related sites and came upon a promising technique for getting one’s pages out of the supplemental index. It’s called “third level push” and looks particularly useful for blogs. This is an advanced technique and you should work on the basic stuff first before trying something like this. […]

Get Your Blog Out Of Supplemental Index (Maybe) | MT-Soft Website Development said this on November 3rd, 2007 at 10:25 am

[…] About two weeks ago I was browsing SEO-related sites and came upon a promising technique for getting one’s pages out of the supplemental index. It’s called “third level push” and looks particularly useful for blogs. This is an advanced technique and you should work on the basic stuff first before trying something like this. […]

ø Get Your Blog Out Of Supplemental Index (Maybe) | W-Shadow.com ø said this on November 21st, 2007 at 1:50 pm

Hiya,

Just wanted to leave you a note here as well…

I figured out how I copied the PageRankBot results. When it worked for me, it was with a smaller site; on larger sites, the Windows Clipboard can’t handle copying and pasting all the data. So here’s what you do:

1. Select only some of the lines (1,000? I don’t know the limits of clipboard memory).

2. Press Ctrl+C.

3. Paste into Notepad with Ctrl+V.

4. Select the text in Notepad, copy it, and paste it into Excel.

5. Repeat.

Like I said, with a small site you can do this all in one copy/paste step, but with a larger site you need to do it in batches. There’s probably an easier way to do this (some clipboard program, or bumping up the default memory for Ctrl+C), but the good news is that it works, and you can save the results into a spreadsheet!

chuckallied said this on November 21st, 2007 at 4:18 pm

Thanks, chuckallied. Several people have requested a CSV import feature, and though I can’t promise when it’ll be implemented, it’s definitely on my to-do list.

Halfdeck said this on November 21st, 2007 at 5:10 pm

[…] In many ways this technique is the opposite to 3rd level push, though the concepts are not mutually exclusive, as whilst you are diverting link juice from a 3rd level document to one on the second tier, that juice then flows evenly (if you want) to your 3rd level. […]

Optimizing HTML Links In The Aftermath Of A Blog Storm | Andy Beard - Niche Marketing said this on November 26th, 2007 at 9:24 am

[…] The more I look around the more I like the architecture. Actually, more correctly, the more I like the potential for a really good architecture. It’s just perfect for an internal linking strategy based on a slightly modified ‘Third Level Push’ (for a great rundown on this topic see Halfdeck’s post). The basic premise of third level push is that you push Pagerank down to your post pages and out on that tier, but not back up to either the adjacent category pages or the homepage. You do this by funnelling Pagerank. […]

The Importance Of Architecture and Messaging - Argolon.com | Search Engine Optimisation & Online Marketing Ireland .:. Red Cardinal said this on December 30th, 2007 at 9:39 am

[…] to a category in the main navigation. I don’t have tags - shock horror! Should I consider the third level push or silo my content […]

No Such Thing as Advanced White Hat SEO? | Hobo SEO UK said this on June 11th, 2008 at 6:48 pm

What about this scenario, given the possibility that Google now says PageRank sent through nofollowed links will be lost rather than “sculpted”?

Another thought/question related to this. Earlier notes I read on the Google algorithm said that, since PageRank is in effect “leaked” when you link off-site, external links should be kept to a minimum. (I have software that measures these ratios, for instance, and now wonder whether that’s even a necessary exercise.) They also said it was preferable to place these links on as few pages as possible. Conversely, I have read that external links to authoritative sources increase your authority via theme relevancy. In what situations do you think each of these is true? Does confining outbound links to fewer pages help?

And given the above, even if nofollow no longer retains PageRank (assuming the reported PageRank/nofollow changes are real), wouldn’t it still be wise to nofollow external links to avoid “leaking” your existing PageRank? Would that still justify the effort of nofollowing under the “new rules”, if they turn out to be real?

For example, we’re redoing some websites right now that have many links for users to download, say, a Flash player, and I was trying to decide whether to nofollow them or not.

Rick Harris said this on June 9th, 2009 at 9:25 am

Rick, we don’t yet know exactly what’s happening now with regard to PageRank and nofollow.

PageRank does leak, and if you have indexing problems you may want to keep an eye on inbound/outbound ratios. It wouldn’t be high on my to-do list, though; the ROI would depend on the type of site you’re working on.

External links to authoritative pages do nothing to increase your authority. The only reason you might link to another authoritative page is to improve user experience.

Nofollowing outbound links to prevent leaking is not something I’d recommend. Nofollow if you’re selling links or if you’re referencing a page but not endorsing it. Nofollowing external links may not help you preserve PageRank under the new rules.
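Why nofollowing external links may stop helping comes down to the divisor. Here is a minimal sketch, assuming a page passes a damping fraction (0.85, an assumption) of its PageRank split evenly across its links. Under the assumed old handling, nofollowed links drop out of the divisor, so the remaining followed links each get more; under the assumed new handling, nofollowed links stay in the divisor and their share simply evaporates. The function name and its parameters are hypothetical, and this is a one-page arithmetic illustration, not a full PageRank computation.

```python
def followed_link_share(page_rank, total_links, nofollowed, damping=0.85,
                        nofollow_evaporates=False):
    """PageRank passed through each followed link on one page (toy model).

    nofollow_evaporates=False: assumed old handling; nofollowed links are
    ignored, so the followed links split the page's vote among themselves.
    nofollow_evaporates=True: assumed new handling; nofollowed links still
    count in the divisor, and the share assigned to them is lost.
    """
    followed = total_links - nofollowed
    if followed <= 0:
        return 0.0
    divisor = total_links if nofollow_evaporates else followed
    return damping * page_rank / divisor


if __name__ == "__main__":
    # Hypothetical page: 10 links, 4 of them external.
    for evaporates in (False, True):
        all_followed = followed_link_share(1.0, 10, 0, nofollow_evaporates=evaporates)
        externals_nofollowed = followed_link_share(1.0, 10, 4, nofollow_evaporates=evaporates)
        label = "new (evaporate)" if evaporates else "old (redistribute)"
        print(f"{label}: {all_followed:.4f} vs {externals_nofollowed:.4f}")
```

Under the assumed new handling, nofollowing the four external links leaves each internal link’s share unchanged, which is the sense in which nofollowing outbound links may no longer preserve PageRank.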

That said, one way to prevent PageRank leakage is to write links out with JavaScript, so that visitors with JavaScript enabled see the links while those without it (including search engines) do not. It’s not a zero-risk tactic, but it shouldn’t be high risk, since you’re not displaying different content based on user agent or IP.

Links to Flash player download pages are borderline for me. Macromedia will probably never link back to me, and they already have plenty of “votes”, so I’m not really robbing them of anything by nofollowing links that point to their site. It depends on what type of site you’re dealing with: the number of links, the (real) PageRank of the pages those links are on, and the total number of links on each of those pages. Still, my guess is you’ll see only a marginal improvement, if any.

Halfdeck said this on June 9th, 2009 at 5:10 pm